US Congress Passes 'Take It Down' Act: A Historic Law to Fight Revenge Porn and AI Deepfakes

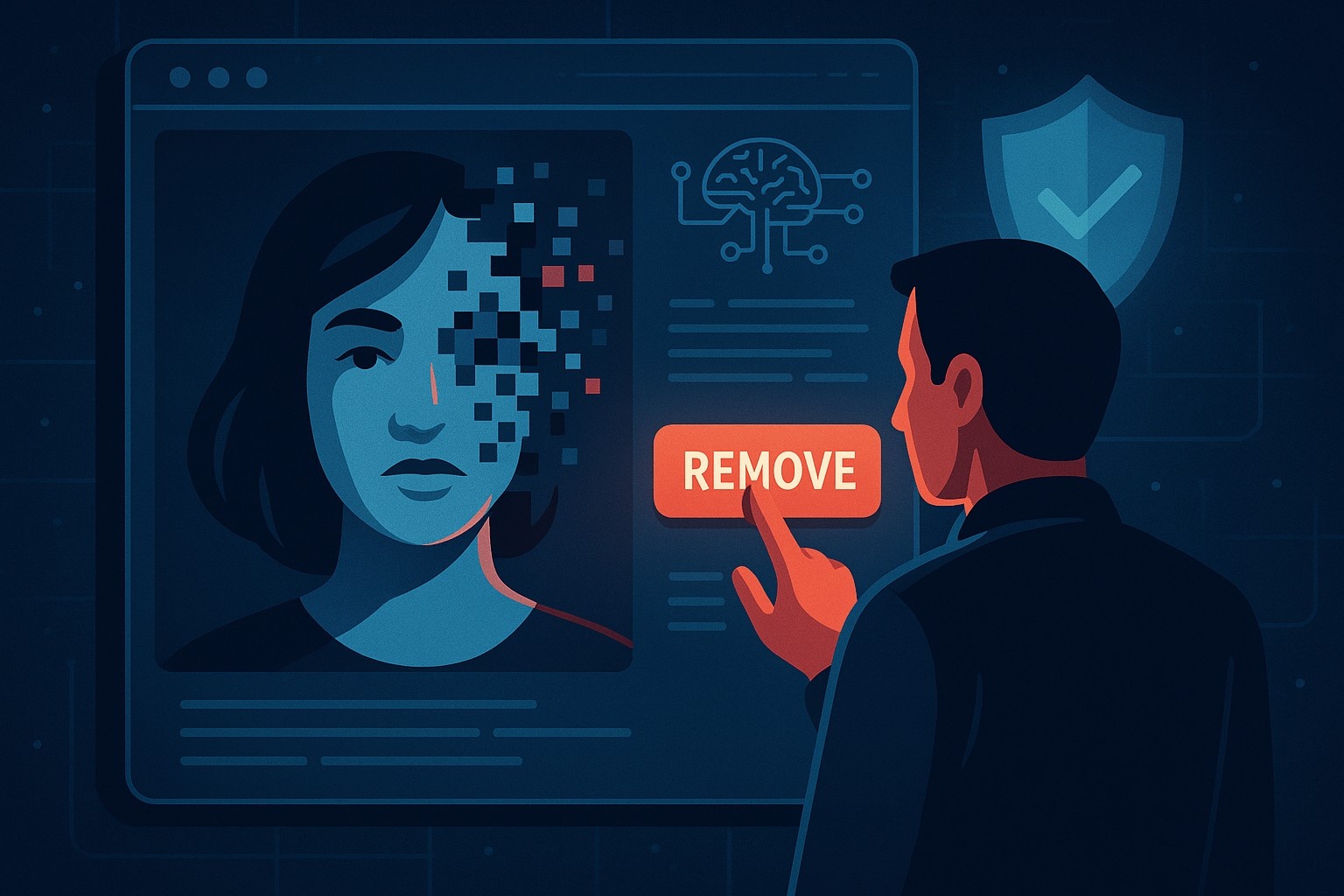

In a landmark moment for digital privacy and online safety, the United States Congress has passed the “Take It Down” Act — a powerful, bipartisan piece of legislation aimed at criminalizing revenge porn and regulating the spread of AI-generated explicit deepfake content.

The rise of artificial intelligence, while promising in many ways, has also opened the door to serious misuse — especially in the creation and distribution of non-consensual intimate imagery, commonly known as revenge porn. What was once a painful personal violation has now evolved into something far more widespread and high-tech: deepfakes that replicate a person’s face and body without their knowledge or consent.

With the passage of this bill, lawmakers are sending a strong message: digital exploitation will not be tolerated, and there will be real consequences for those who use technology to harm others.

What Is the 'Take It Down' Act?

The “Take It Down” Act is designed to give victims of revenge porn and AI deepfakes the tools to reclaim their dignity. Here’s what the bill does:

- Makes It a Federal Crime to Share Intimate Images Without Consent: Anyone found guilty of knowingly sharing, or threatening to share, non-consensual sexual images, whether real or AI-generated, could face:

  - Up to two years in prison

  - Up to three years in prison if the victim is a minor

- Forces Platforms to Remove Harmful Content Quickly: Social media platforms, websites, and file hosts will be legally obligated to remove reported explicit content within 48 hours of receiving a valid request from the victim. They must also make reasonable efforts to remove identical copies. Platforms that fail to comply face enforcement by the Federal Trade Commission (FTC), which will treat noncompliance as an unfair or deceptive practice.

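- Requires a Notice-and-Removal Process: Each covered platform must set up its own system through which victims, or someone acting on their behalf, can request removal. Platforms have one year after the law is enacted to do so. The act does not create a single national portal. For images of minors, a related tool already exists: the "Take It Down" service run by the National Center for Missing & Exploited Children (NCMEC), which shares digital fingerprints of reported images with participating platforms so the images can be found and removed.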

Why This Bill Was Needed

The Problem Is Bigger Than Most Realize

While revenge porn has been a known issue for years, AI deepfakes have rapidly escalated the threat. What used to require sophisticated editing skills can now be done with a smartphone and a few clicks.

Victims are often:

- Women and teenage girls

- Celebrities, influencers, and public figures

- Everyday people targeted by stalkers, ex-partners, or online trolls

These images spread like wildfire, often going viral before the victim even knows they exist. And until now, the law didn’t always have their back.

Real Lives, Real Harm: The Case of Elliston Berry

The urgency behind this legislation was underscored by the heartbreaking story of Elliston Berry, a 14-year-old girl from Texas. A classmate used an AI app to generate explicit photos of her, which were then circulated among students.

Despite the images being fake, the psychological damage was real. Elliston and her family struggled for months to get the content removed from social media and pornographic websites. There was no clear law that protected her. No obvious consequence for the person responsible.

Her story sparked outrage across the country — and helped convince lawmakers that the time for action was now.

A Rare Bipartisan Victory

In today’s politically divided America, it’s rare to see both Democrats and Republicans agreeing on major legislation. But this bill brought people together.

The “Take It Down” Act was introduced by:

- Senator Ted Cruz (R-Texas)

- Senator Amy Klobuchar (D-Minnesota)

It gained widespread bipartisan support. It passed the Senate by unanimous consent in February 2025 and the House by a vote of 409 to 2 in April 2025.

Even First Lady Melania Trump made a surprise return to the national spotlight by championing the bill. At a press event on Capitol Hill, she called the issue of AI-generated abuse “a silent epidemic” and urged lawmakers to “stand with the victims.”

What Tech Companies Are Saying

Big tech platforms have expressed support for the bill, acknowledging the need for tighter protections.

Meta (formerly Facebook) issued a statement through spokesperson Andy Stone:

“Having an intimate image — real or AI-generated — shared without your consent is devastating. We fully support tools and legislation to prevent and remove this kind of abuse.”

Other companies, including TikTok, Reddit, and OnlyFans, are expected to update their community guidelines and internal moderation policies to stay compliant with the new law.

Not Without Controversy

While the bill has received praise, some organizations have raised free speech and enforcement concerns.

The Electronic Frontier Foundation (EFF), a nonprofit focused on digital rights, warned that the law’s broad language could lead to over-censorship. They fear:

- Legitimate artistic or journalistic content could be removed mistakenly

- The takedown system could be abused for false claims or political censorship

- Smaller platforms may struggle to comply with rapid removal timelines

The balance between protecting victims and preserving online freedom will need to be watched closely as the law rolls out.

What Happens Next?

The bill has passed Congress and is now awaiting the President’s signature to become federal law. Once signed:

- The FTC will begin developing enforcement rules and guidance

- Platforms will have one year to build out their notice-and-removal systems

- Victims will have access to legal tools and support resources they’ve never had before

Nearly every state already has its own law against non-consensual intimate imagery, but how those laws treat AI-generated content varies. The federal law adds a nationwide baseline on top of these state protections.

What This Means for Everyday People

If you’re a content creator, influencer, parent, or just someone who exists online — this law matters to you.

Here’s how it protects you:

- If someone uses AI to fake an intimate photo or video of you, you have a legal right to get it taken down

- If an ex-partner or troll shares a private image, they can now face prison time

- Platforms must act fast: reported content has to come down within 48 hours, so there is no more endless waiting or ignored requests

Final Thoughts: A Turning Point for Digital Dignity

The “Take It Down” Act is more than just another internet bill. It’s a statement about human dignity in the digital age.

In a world where AI tools can create convincing lies, where private moments can become viral ammunition, and where teens like Elliston Berry are targeted for simply existing — this law is a shield.

It’s not perfect. It may need tweaks. But it’s a massive step in the right direction.

Because in the end, no one should have to beg to have their body, identity, or humanity respected online.